Outscraper F.A.Q.

General

Scraping (also called harvesting or extracting) is the process of automatically collecting information from a public website. It automates the manual export of data.

The scraping and extracting of public data is protected by the First Amendment of the United States Constitution. The Ninth Circuit Court of Appeals ruled that automated scraping of publicly accessible data does not violate the Computer Fraud and Abuse Act (CFAA). However, you should consult an attorney about your specific case to make sure you comply with the laws in your jurisdiction.

Every scraping task runs in the cloud, so your IP address is not affected by the scraping.

We extract only publicly available data, and the scraper works as a browser for data scientists, developers, and marketers.

The mechanism to guarantee PII-free data is to select what columns you want to return.

No. All scraping activities occur on Outscraper servers, ensuring that your IP address is not utilized for data scraping. It also means your computer can be turned off when extraction tasks are running.

Payments & Subscriptions

The invoice will be generated based on the usage of the services during the billing period (30 days). The prices are listed on the page.

Free Tier is the amount of usage you get for free each month. For example, some products have a Free Tier of 500 requests per month. This means any usage of the product below 500 requests during a month is free.

When a subscription is finalized, you will receive an invoice for the usage of the services during the subscription period.

If you do not make a payment within 3 days, the system will try to charge you automatically.

- Before adding credits, make sure you have entered your details on the Billing Information page (in case you need them on invoices or POs).

- Navigate to your profile page.

- Enter the number of credits you want to add.

- Choose the payment method you want to use in order to add credits (credit card, PayPal, etc.).

- After clicking the button with your payment method, follow the steps of the payment method provider.

Once you have some usage, you can see the upcoming invoice on the Profile Page. Once you have the amount due, you can generate the invoice manually by clicking “Generate Invoice”, or it will be generated automatically within 30 days.

Once you add credits to your account (prepaid option), you will receive the receipt for the transaction by email.

Invoices with the usage of specific services will be generated after each billing period (30 days). Alternatively, once you have the amount due, you can generate the invoice manually by clicking “Generate Invoice” on the Profile Page.

Yes. Outscraper will charge your account balance $10 and issue an additional invoice with the amount due.

No. The task will be finished, and if the task usage is greater than your account credits, you will simply receive an invoice with the outstanding usage.

You can set limits to cap the amount of extracted data.

- Open Outscraper Platform.

- Navigate to the Billing Information page.

- Enter the necessary billing information you want to see on your invoices.

- Click the Save button. All your future invoices will be created with the information you have entered.

Refer a friend and start receiving 35% of your referral payments to your account balance. Your referral will receive a 25% discount on their first payment. Get your referral link now.

API

The limit of queries per second depends on the nature of the requests, the service, and the request parameters (amount of results, number of queries, etc.). The average QPS is about 20 (soft limit). However, Outscraper can scale according to your needs. Please contact the team in case you need a higher QPS.

Yes. The API supports batching: you can send an array of up to 25 queries in one request (e.g., query=text1&query=text2&query=text3). Sending multiple queries in one request saves on network latency.
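As a minimal sketch of the batching described above, the snippet below splits a list of queries into groups of at most 25 and encodes each group as repeated query parameters. The helper names (chunk, batch_query_string) and the sample queries are illustrative assumptions, and the snippet only builds the query string; it does not call the API.

```python
from urllib.parse import urlencode

MAX_BATCH = 25  # per the FAQ: up to 25 queries per request


def chunk(queries, size=MAX_BATCH):
    """Split a list of queries into consecutive batches of at most `size`."""
    return [queries[i:i + size] for i in range(0, len(queries), size)]


def batch_query_string(batch):
    """Encode one batch as repeated `query` parameters."""
    return urlencode([("query", q) for q in batch])


queries = [f"text{i}" for i in range(1, 61)]  # 60 sample queries (made up)
batches = chunk(queries)

print(len(batches))                         # 3 requests instead of 60
print(batch_query_string(batches[0][:3]))   # query=text1&query=text2&query=text3
```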

Navigate to the API Usage History page to see your latest requests.

Navigate to the Profile Page and open the API token section to create a new key.

The average response time is 3-5 seconds. However, it depends on the type of service (speed-optimized or not) and the number of queries per request (batch option).

There are a few key points you should follow to increase the throughput of the API.

- Make sure you are using the latest versions of the API endpoints. For example, prefer Places API V2 over Places API V1. If you use the SDK, the latest API version is used by default (e.g. google_maps_search()).

- Use batching to send up to 25 queries per request (e.g., query=text1&query=text2&query=text3). Sending multiple queries in one request saves on network latency.

- Run requests in parallel. Check out this example.

- Use a webhook to fetch the results once they are ready.
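The "run requests in parallel" point above can be sketched with a thread pool. This is only an illustration under stated assumptions: fetch_batch is a hypothetical placeholder that echoes its input so the example runs offline, and you would replace its body with a real API or SDK call.

```python
from concurrent.futures import ThreadPoolExecutor


def fetch_batch(batch):
    """Hypothetical placeholder for one API request.

    Replace the body with a real call. Here it only echoes the
    queries so the sketch runs without network access.
    """
    return [{"query": q, "results": []} for q in batch]


def run_parallel(batches, max_workers=4):
    """Send batches concurrently; responses come back in input order."""
    with ThreadPoolExecutor(max_workers=max_workers) as pool:
        return list(pool.map(fetch_batch, batches))


batches = [["text1", "text2"], ["text3"], ["text4", "text5"]]
responses = run_parallel(batches)
print(len(responses))  # one response per batch
```

Keep max_workers in line with the QPS limit of your account, since more threads mean more simultaneous requests.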

Some tasks can take time to extract the data. There are a few ways to handle timeouts.

- Use retries. Expect that some number of scraping requests might return an error or timeout. Usually, trying one more time solves the issue.

- Use async requests. A good practice is to send async requests and start checking the results after the estimated execution time. Check out this Python implementation as an example.

- Use a webhook to fetch the results once they are ready.
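The retry and async-polling ideas above might look like the sketch below. Both helpers are generic and hypothetical (not part of any SDK): with_retries wraps any callable, and poll_until_ready expects a callable that returns None while the result is still pending. The flaky function only simulates a timeout for demonstration.

```python
import time


def with_retries(call, attempts=3, delay=1.0):
    """Call `call()` and retry on any exception, up to `attempts` tries."""
    for attempt in range(1, attempts + 1):
        try:
            return call()
        except Exception:
            if attempt == attempts:
                raise  # out of retries: surface the last error
            time.sleep(delay * attempt)  # wait a bit longer each time


def poll_until_ready(check, interval=5.0, max_checks=20):
    """Poll `check()` until it returns a non-None result or checks run out."""
    for _ in range(max_checks):
        result = check()
        if result is not None:
            return result
        time.sleep(interval)
    raise TimeoutError("result was not ready in time")


# Demo: a request that times out once and then succeeds.
calls = {"n": 0}


def flaky():
    calls["n"] += 1
    if calls["n"] < 2:
        raise TimeoutError("simulated timeout")
    return "ok"


print(with_retries(flaky, delay=0))  # ok
```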

Google Maps Scraper

Sometimes Google adds other categories to your searches. For example, when you search for restaurants, you might see bars, coffee shops, or even hotels. This might lead to irrelevant data, especially when you are using minor categories like swimming pools.

Outscraper provides you with two tools that you can use to eliminate those categories.

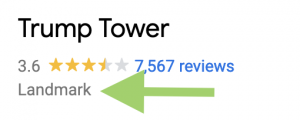

The landmark category on Google Maps

Use the “exact match” checkbox. The parameter specifies whether to return only the categories you selected or everything that Google shows. Make sure you are using the right categories by opening similar places on Google and checking the category.

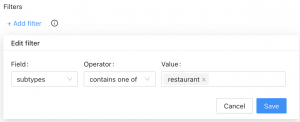

Filtering the results

Filter results by applying Filters to the subtypes column. Such filters can eliminate all the irrelevant data and return only what you need. To avoid empty results, make sure you are familiar with the values of the fields before using the Filters.

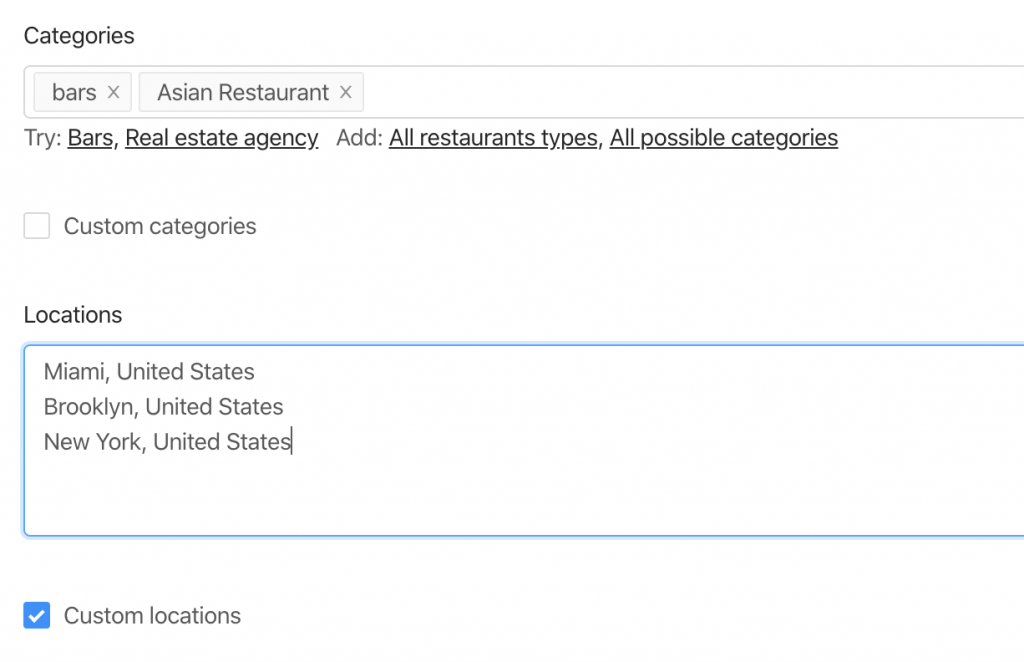

In some cases, the predefined locations and/or categories aren’t enough. Use the “Custom locations” and/or “Custom categories” options to insert the categories and/or locations you need.

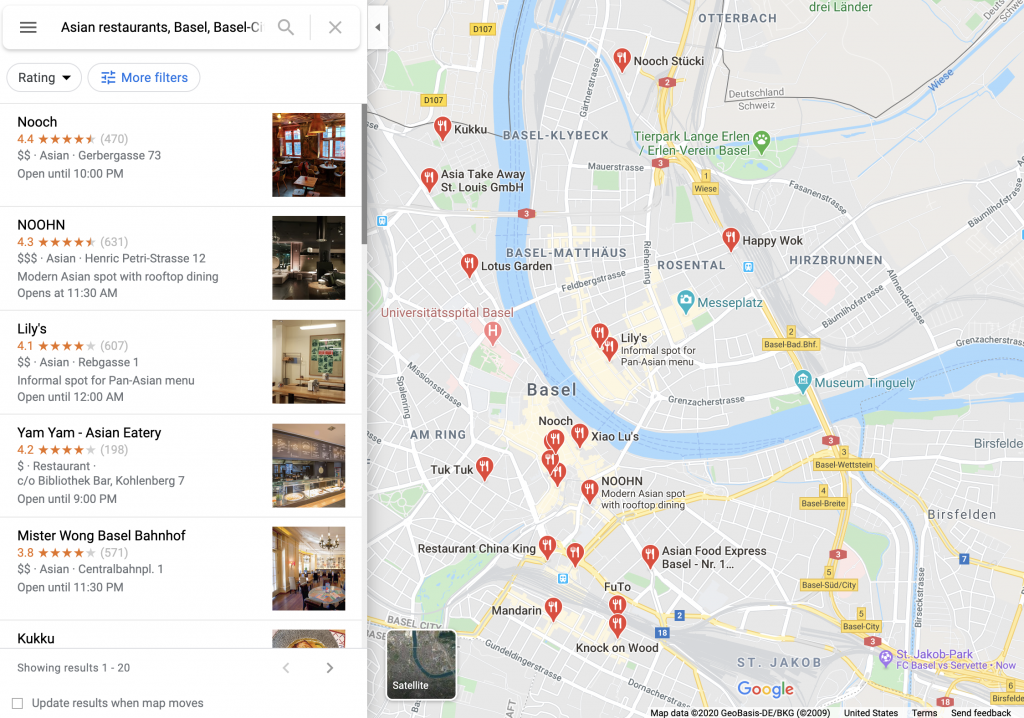

Google Maps shows at most 400-500 places per search query. This may be a problem when a category contains more companies than that, for example, for the query “restaurants, Brooklyn”.

To overcome this, we suggest splitting the location into sub-locations. For example, by using postal codes:

“restaurants, Brooklyn 11203”,

“restaurants, Brooklyn 11211”,

“restaurants, Brooklyn 11215”,

…

Or using queries with sub-categories:

“Asian restaurants, Brooklyn”,

“Italian restaurants, Brooklyn”,

“Mexican restaurants, Brooklyn”,

…

Check the “Use queries” switcher and enter the queries.
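If you have many postal codes or sub-categories, the split queries can be generated rather than typed by hand. A minimal sketch, using only the example values from this answer:

```python
category = "restaurants"
city = "Brooklyn"
postal_codes = ["11203", "11211", "11215"]
sub_categories = ["Asian", "Italian", "Mexican"]

# One query per postal code, e.g. "restaurants, Brooklyn 11203"
by_postal_code = [f"{category}, {city} {code}" for code in postal_codes]

# One query per sub-category, e.g. "Asian restaurants, Brooklyn"
by_sub_category = [f"{sub} {category}, {city}" for sub in sub_categories]

# One query per line, ready to paste into the queries field
print("\n".join(by_postal_code + by_sub_category))
```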

To force Google to search for particular companies only, enclose the term in quotation marks (“ ”). The quotation-mark operator is typically used around stop words (words that Google would otherwise ignore) or when you want Google to return only those pages that match your search terms exactly.

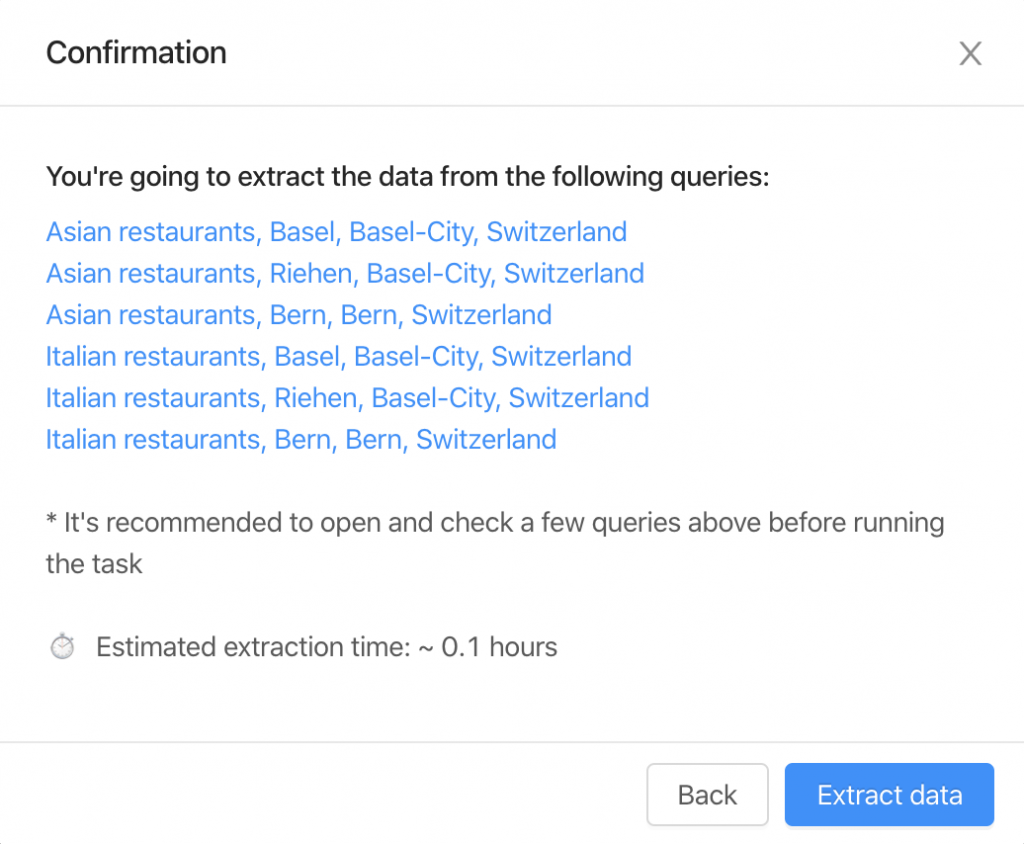

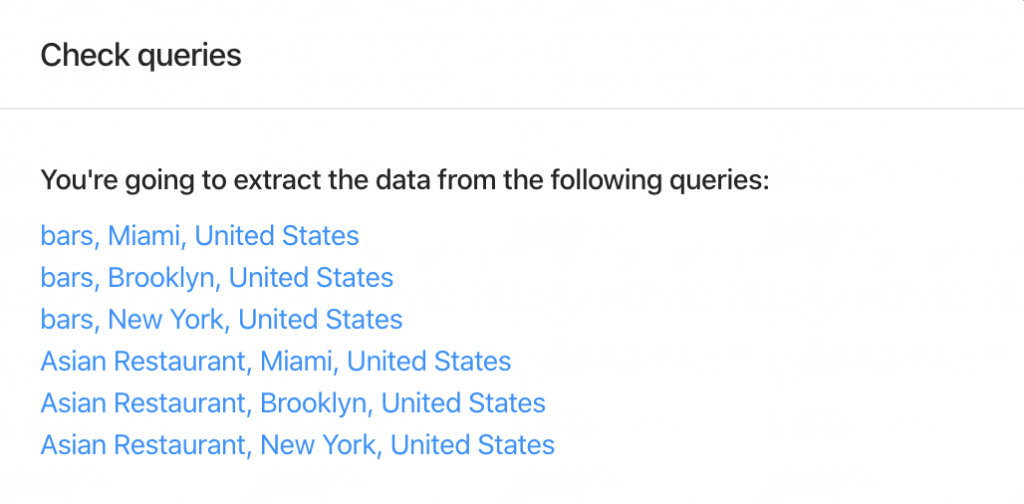

After clicking the “Extract data…” button, you will see the task estimate and the queries.

It’s recommended to click and open a few queries to check how they look on the Google Maps site.

Two parameters control the expected number of results.

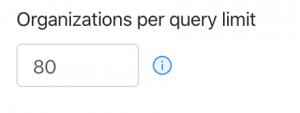

1. Organizations per query limit – the limit of organizations to take from one query.

2. Number of queries – the number of search queries you’re going to make.

For example, to extract data from 2 categories and 3 locations, the bot will make 6 queries (2 categories * 3 locations).

Therefore, with a limit of 80 organizations per query, the result will contain no more than 480 organizations (limit of 80 * 6 queries).
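The same estimate can be reproduced in a few lines. The category and location names below are made-up placeholders; only the counts (2 categories, 3 locations) and the limit of 80 come from the example above.

```python
from itertools import product

categories = ["restaurants", "bars"]             # placeholder names
locations = ["Brooklyn", "Queens", "Manhattan"]  # placeholder names
per_query_limit = 80                             # organizations per query limit

# Every category is combined with every location.
queries = [f"{c}, {loc}" for c, loc in product(categories, locations)]
max_results = per_query_limit * len(queries)

print(len(queries), max_results)  # 6 480
```

This is an upper bound: the actual number of results can be lower when a query returns fewer places than the limit or when duplicates are dropped.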

You can remove duplicates in one task by selecting the “Drop duplicates” checkbox.

Yes, you can drop duplicates inside one task by using the “Drop duplicates” checkbox (advanced parameters). Alternatively, you can drop them yourself by using the “google_id” or “place_id” field as a unique identifier for a place.
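If you prefer to remove duplicates yourself, for example across several tasks, one possible approach is to keep the first row seen for each “place_id”. The sketch below assumes the results are already loaded as a list of dictionaries; the sample rows are invented for illustration.

```python
def drop_duplicates(places, key="place_id"):
    """Keep the first occurrence of each place, identified by `key`.

    Rows without the key are kept as-is, since they cannot be compared.
    """
    seen = set()
    unique = []
    for place in places:
        identifier = place.get(key)
        if identifier is not None:
            if identifier in seen:
                continue  # already have this place
            seen.add(identifier)
        unique.append(place)
    return unique


# Invented sample rows: the second one repeats the first place.
rows = [
    {"name": "Cafe One", "place_id": "id-1"},
    {"name": "Cafe One", "place_id": "id-1"},
    {"name": "Cafe Two", "place_id": "id-2"},
]
print(len(drop_duplicates(rows)))  # 2
```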

The exact number of results will be known only after the extraction.

You can use “Total places limit” to cap the final number of results scraped.

Yes. You can use the following link as a query: “https://www.google.com/maps/search/real+estate+agency/@41.4034,2.1718413,17z” where you can specify a query (real+estate+agency), the coordinates (41.4034,2.1718413) and zoom level (17z). You can find these values while visiting Google Maps.

Alternatively, you can use the “coordinates” parameter if you are using the API.
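As a small sketch, a link of this form can be assembled from its three parts. The function name maps_search_url is a hypothetical helper, not part of any SDK; the URL pattern is the one shown above.

```python
from urllib.parse import quote_plus


def maps_search_url(query, lat, lng, zoom=17):
    """Build a Google Maps search link from a query, coordinates, and zoom."""
    # quote_plus turns spaces into "+", e.g. "real estate agency" -> "real+estate+agency"
    return f"https://www.google.com/maps/search/{quote_plus(query)}/@{lat},{lng},{zoom}z"


print(maps_search_url("real estate agency", 41.4034, 2.1718413))
# https://www.google.com/maps/search/real+estate+agency/@41.4034,2.1718413,17z
```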

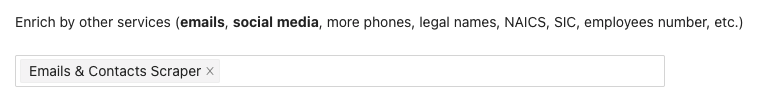

Yes. You can use Emails & Contacts Scraper along with Google Maps Scraper to enrich the data from Google. To do so, select “Emails & Contacts Scraper” in the “Enrich by other services” section on the Google Maps Scraper page.

You will pay only for the results you extract, no matter how many queries you make.

No. There is no public information about emails connected with the listing on Google Maps. Outscraper uses external sources to find those emails.

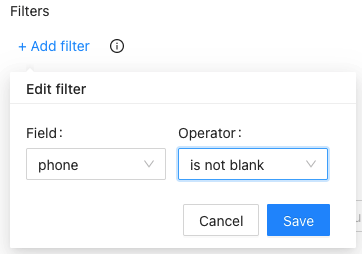

Yes. You can use filters from the advanced parameters with the following operator.

You can split your tasks by city/state or some other unique attributes. For example, you can extract places from New York in the first task and places from California in the second task.

Yes. To find businesses without websites, you can use our advanced search filters. Choose the ‘site’ field and set it to ‘is blank’. This will show you businesses that don’t have a website. If you want to see businesses with websites, just set the ‘site’ field to ‘is not blank’ instead.

To learn more about filters, please visit this article: https://outscraper.com/google-maps-data-scraper-filters/
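If you have already exported the data, a comparable “is blank” / “is not blank” split can be done locally. This is only a client-side sketch, not how the platform filter is implemented; it assumes the rows are dictionaries with an optional “site” field, and the sample rows are invented.

```python
def is_blank(value):
    """Treat a missing value, None, or whitespace-only text as blank."""
    return value is None or str(value).strip() == ""


# Invented sample rows for illustration.
places = [
    {"name": "A", "site": "https://a.example"},
    {"name": "B", "site": ""},
    {"name": "C"},  # no "site" field at all
]

without_site = [p for p in places if is_blank(p.get("site"))]
with_site = [p for p in places if not is_blank(p.get("site"))]

print([p["name"] for p in without_site])  # ['B', 'C']
print([p["name"] for p in with_site])     # ['A']
```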

Contact Us

Questions, special needs, issues... Always happy to hear from you.