
Understanding Why "Businesses with Websites" Matter

How do you scrape businesses with websites only? Configure your scraping tool to return only business listings that include a working website URL. According to the 2024 US small-business digital transformation survey, 70% of respondents in the United States had a website that year.

Businesses with websites matter for lead generation for several reasons. When a listing includes a website, you immediately gain clearer signals about the business's niche, size, credibility, and digital readiness.

Companies with websites are also easier to evaluate, and their contact information is faster to verify.

Key Benefits of Targeting Businesses with Websites

- Higher-quality leads with accessible online profiles and verifiable information.

- Better segmentation by niche, industry, or online content type.

- Easier assessment of digital readiness, including SEO performance, services offered, and website structure.

What is Web Scraping and How Does it Work?

Web scraping is the automated process of collecting and extracting data from websites. It allows you to gather structured business information such as company name, website domain, contact details, and industry category. Tools like Outscraper make this process simple, extracting public data quickly and accurately.

Scraping publicly accessible information is generally permissible, particularly for internal uses such as building lead lists, performing market research, or analyzing online trends, but the rules vary by jurisdiction and by each site's terms. Avoid scraping sensitive, private, or restricted data to stay within ethical and legal boundaries.

By applying web scraping effectively, you can build high-quality business datasets without manual research, saving time and improving lead targeting.

How to Identify Businesses that Have Websites Only

The first step in building a high-quality lead list is identifying businesses that actually have websites. Focus on filtering your data sources so only entries with valid URLs are included.

Filtering Methods:

- Scrape business directories and include only listings with website URLs.

- Exclude businesses without websites:

  - In Google Maps, identify listings where the website field is empty or missing and drop them. These entries typically belong to businesses that rely solely on offline operations.

  - On industry-specific directories, filter out entries marked as “No website” or entries that only list a phone number or physical address.

Maintaining these exclusions ensures your list contains only leads with a verifiable web presence.
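The filtering rules above can be expressed as a small post-processing step. This is a minimal Python sketch, assuming each listing is a dict and that its website sits under a key named `site` (an assumed field name; rename it to match your export). It keeps only records whose website field parses as an http(s) URL with a dotted host name, which removes empty and missing fields but does not prove the site is live.

```python
from urllib.parse import urlparse

def has_website(record: dict, field: str = "site") -> bool:
    """Return True if record[field] looks like a usable http(s) website URL."""
    # "site" is an assumed field name; change it to match your data source.
    raw = (record.get(field) or "").strip()
    if not raw:
        return False  # empty or missing website field: exclude the listing
    if "://" not in raw:
        raw = "http://" + raw  # tolerate bare domains such as "example.com"
    try:
        parsed = urlparse(raw)
        host = parsed.hostname or ""
    except ValueError:
        return False  # malformed URL (e.g. a broken IPv6 literal)
    # Require a web scheme and a host name with at least one dot.
    return parsed.scheme in ("http", "https") and "." in host

def only_with_websites(records, field="site"):
    """Keep listings that have a website, preserving their original order."""
    return [r for r in records if has_website(r, field)]

# Hypothetical listings, shaped like rows from a directory or Maps export.
listings = [
    {"name": "Sunrise Dental", "site": "https://sunrisedental.example.com"},
    {"name": "Corner Bakery", "site": ""},
    {"name": "Main St Auto", "phone": "555-0100"},
    {"name": "Bright Clinic", "site": "brightclinic.example.org"},
]
print([r["name"] for r in only_with_websites(listings)])
# ['Sunrise Dental', 'Bright Clinic']
```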

Common Sources to Start With:

- Industry-Specific Directories – Filter for entries that include website information.

- Google Maps – Manually copy and paste businesses with websites, or use Outscraper’s Google Maps Data Scraper to extract only businesses with websites.

Cross-Verification

Combine multiple datasets to improve accuracy. Use public business registration records or APIs to verify each business’s web presence. This step confirms that the website is active and the contact information is valid.
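One way to confirm that a website is active is to request it and check the HTTP response. The sketch below uses only Python's standard library; the HEAD-then-GET fallback, the 10-second timeout, the HTTPS default for bare domains, and the `lead-list-checker/0.1` user-agent string are illustrative choices, not requirements of any tool mentioned here.

```python
import http.client
import urllib.error
import urllib.request

def is_site_active(url: str, timeout: float = 10.0) -> bool:
    """Return True if the URL answers with a 2xx status, following redirects."""
    if "://" not in url:
        url = "https://" + url  # assumption: bare domains are tried over HTTPS
    # Try a lightweight HEAD first; some servers reject HEAD, so fall back to GET.
    for method in ("HEAD", "GET"):
        request = urllib.request.Request(
            url, method=method, headers={"User-Agent": "lead-list-checker/0.1"}
        )
        try:
            # urlopen raises HTTPError for 4xx/5xx, so reaching the body means success.
            with urllib.request.urlopen(request, timeout=timeout):
                return True
        except urllib.error.HTTPError as err:
            if method == "HEAD" and err.code in (403, 405, 501):
                continue  # HEAD may be blocked; retry the same URL with GET
            return False
        except (OSError, ValueError, http.client.HTTPException):
            # DNS failure, refused connection, timeout, or malformed URL.
            return False
    return False
```

For example, `is_site_active("https://clinic.example.com")` returns `True` only if the server answers with a 2xx status after redirects. A `False` result can also mean the site blocks automated requests, so treat it as a flag for manual review rather than proof that the business is offline.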

Key Strategies for Scraping Businesses with Websites


Use Specialized Tools and APIs

- Outscraper’s Google Maps Data Scraper

  - Extract business names, categories, business status, ratings, and reviews.

  - Filter businesses by the “with websites” field for precise targeting.

- Google Places API

  - Find businesses and cross-reference them with business license databases or website URLs.

- Email and Contact Scrapers

  - Tools like Outscraper’s Email & Contact Scraper, Hunter.io, and Snov.io extract emails and social profiles linked to specific domains.

- AI Scrapers

  - Platforms such as Outscraper’s AI-Powered Extractor, Browse AI, and Clay detect and extract structured website data automatically using AI.
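For the Google Places API route, the Text Search endpoint of the Places API (New) can return each place's website in the `websiteUri` field when you request it in the field mask. The sketch below builds that request with the standard library and filters a response down to places that list a website. The query text, the `YOUR_API_KEY` placeholder, and the sample response are illustrative, and a live call needs a key with the Places API (New) enabled.

```python
import json
import urllib.request

PLACES_TEXT_SEARCH_URL = "https://places.googleapis.com/v1/places:searchText"

def build_text_search_request(query: str, api_key: str) -> urllib.request.Request:
    """Build a Places API (New) Text Search request for only the fields we need."""
    body = json.dumps({"textQuery": query}).encode("utf-8")
    return urllib.request.Request(
        PLACES_TEXT_SEARCH_URL,
        data=body,
        method="POST",
        headers={
            "Content-Type": "application/json",
            "X-Goog-Api-Key": api_key,
            # The field mask is required and limits the response to these fields.
            "X-Goog-FieldMask": "places.displayName,places.formattedAddress,places.websiteUri",
        },
    )

def places_with_websites(response: dict) -> list:
    """Flatten a Text Search response, keeping only places that list a website."""
    results = []
    for place in response.get("places", []):
        website = place.get("websiteUri")
        if website:
            results.append({
                "name": place.get("displayName", {}).get("text", ""),
                "address": place.get("formattedAddress", ""),
                "website": website,
            })
    return results

# Hypothetical response, shaped like a Text Search reply; not real data.
sample = {"places": [
    {"displayName": {"text": "Clinic A", "languageCode": "en"},
     "formattedAddress": "100 Example Ave, Los Angeles, CA",
     "websiteUri": "https://clinic-a.example.com/"},
    {"displayName": {"text": "Clinic B", "languageCode": "en"},
     "formattedAddress": "200 Example Ave, Los Angeles, CA"},
]}
print(places_with_websites(sample))
```

To run it against the live API, pass the built request to `urllib.request.urlopen` and feed `json.load(response)` into `places_with_websites`. Text Search returns a limited page of results per request, so larger pulls need pagination.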

Target High-Value Data Sources

- Online Business Directories

  - Filter for listings that include a website field.

  - Examples: Clutch, Crunchbase, Targetron, and local chamber of commerce listings.

- Search Engines

  - Use advanced Google operators, e.g., “digital marketing agency” + “website” + “Los Angeles”, to find results with URLs.

  - Scrape the resulting pages using Outscraper or browser extensions.

- Business Registration Databases

  - Combine government-issued business lists with web scraping to confirm each company’s online presence.
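Cross-referencing a government business list with scraped listings usually comes down to matching records on a shared key. This sketch assumes the simplest case, matching on a normalized business name. The field names (`name`, `site`, `license_id`), the legal-suffix list, and the sample records are hypothetical, and real registries often need extra matching on address or phone number to avoid false matches.

```python
import re

# Legal suffixes ignored when comparing names; illustrative, extend it for your region.
LEGAL_SUFFIXES = {"inc", "llc", "ltd", "co", "corp", "corporation", "company"}

def normalize_name(name):
    """Lowercase, replace punctuation with spaces, and drop legal suffixes."""
    words = re.sub(r"[^a-z0-9 ]+", " ", name.lower()).split()
    return " ".join(w for w in words if w not in LEGAL_SUFFIXES)

def confirm_online_presence(registry, scraped):
    """Return registry records that match a scraped listing with a website, plus that website."""
    sites = {normalize_name(s["name"]): s["site"] for s in scraped if s.get("site")}
    confirmed = []
    for company in registry:
        site = sites.get(normalize_name(company["name"]))
        if site:
            confirmed.append({**company, "site": site})
    return confirmed

registry = [
    {"name": "Acme Dental, LLC", "license_id": "A-1001"},
    {"name": "Blue Sky Plumbing Inc.", "license_id": "B-2002"},
]
scraped = [
    {"name": "ACME DENTAL", "site": "https://acmedental.example.com"},
    {"name": "Blue Sky Plumbing", "site": ""},
]
print(confirm_online_presence(registry, scraped))
```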

Apply Effective Web Scraping Techniques

- No-Code Tools

  - Drag-and-drop platforms like Outscraper, Octoparse, and ParseHub allow non-developers to collect website-based data quickly.

- Coding Frameworks

  - Python libraries such as BeautifulSoup or Scrapy support advanced custom scraping workflows.

- Dynamic Content Handling

  - Selenium and Puppeteer handle websites that load content via JavaScript.

- Output

  - Export your data to CSV or JSON, then import it into CRMs or enrichment platforms for lead management.
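As a small example of a custom scraping workflow, the sketch below pulls email addresses and phone numbers from `mailto:` and `tel:` links on a page and serializes them as JSON. To stay dependency-free it uses Python's built-in `html.parser` rather than BeautifulSoup or Scrapy (in BeautifulSoup the equivalent lookup would be a CSS selector such as `a[href^="mailto:"]`). The sample HTML is made up, and pages that build their links with JavaScript would need a browser tool like Selenium or Puppeteer first.

```python
import json
from html.parser import HTMLParser

class ContactLinkParser(HTMLParser):
    """Collect email addresses and phone numbers from mailto: and tel: links."""

    def __init__(self):
        super().__init__()
        self.emails = set()
        self.phones = set()

    def handle_starttag(self, tag, attrs):
        if tag != "a":
            return
        href = (dict(attrs).get("href") or "").strip()
        if href.lower().startswith("mailto:"):
            # Drop any ?subject=... query string after the address.
            self.emails.add(href[7:].split("?", 1)[0].strip().lower())
        elif href.lower().startswith("tel:"):
            self.phones.add(href[4:].strip())

def extract_contacts(html):
    """Return sorted emails and phones found in the page's links."""
    parser = ContactLinkParser()
    parser.feed(html)
    parser.close()
    return {"emails": sorted(parser.emails), "phones": sorted(parser.phones)}

# Hypothetical homepage HTML for illustration.
page = """
<html><body>
  <a href="mailto:Info@clinic.example.com?subject=Hello">Email us</a>
  <a href="tel:+1-555-0100">Call</a>
  <a href="/about">About</a>
</body></html>
"""
contacts = extract_contacts(page)
print(json.dumps(contacts))
# {"emails": ["info@clinic.example.com"], "phones": ["+1-555-0100"]}
```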

Best Practices and Ethical Considerations

When scraping businesses with websites, following ethical and technical best practices helps you stay compliant and maintain data quality. Responsible scraping protects both your reputation and the performance of your tools.

Check Permissions and Limits

Before running any scraper, always verify that your activity respects the website’s rules and data privacy standards.

- Review robots.txt before scraping.

- Respect each site’s Terms of Service.

- Avoid scraping personal data or login-protected pages.
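Python's standard library ships a robots.txt parser, `urllib.robotparser`, which is enough to check a URL before you queue it. This sketch parses robots.txt text you have already fetched; the rules and the `lead-list-bot` user-agent name are illustrative.

```python
from urllib.robotparser import RobotFileParser

def load_robots(robots_txt: str) -> RobotFileParser:
    """Parse robots.txt text that has already been downloaded."""
    parser = RobotFileParser()
    parser.parse(robots_txt.splitlines())
    return parser

# Illustrative robots.txt content, not taken from any real site.
SAMPLE_ROBOTS = """
User-agent: *
Disallow: /admin/
Disallow: /account/
Crawl-delay: 5
"""

rules = load_robots(SAMPLE_ROBOTS)
agent = "lead-list-bot"  # hypothetical user-agent name for your scraper
print(rules.can_fetch(agent, "https://example.com/services"))       # True
print(rules.can_fetch(agent, "https://example.com/account/login"))  # False
print(rules.crawl_delay(agent))                                     # 5
```

To check a live file instead, create `RobotFileParser("https://example.com/robots.txt")` and call its `read()` method before `can_fetch()`. robots.txt only covers crawling rules; the site's Terms of Service still need a separate review.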

Manage Server Load Responsibly

Running scrapers at scale requires care to avoid overwhelming target websites.

- Throttle requests to mimic human browsing.

- Schedule scrapes during off-peak hours.

- Use Outscraper’s built-in rate limiting and proxy rotation.
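If you run your own scraper rather than relying on built-in rate limiting, throttling can be as simple as enforcing a minimum gap between requests. The sketch below adds a little random jitter so requests do not arrive at perfectly regular intervals. The 0.2-second interval and 0.1-second jitter are only for the demo; in practice you would choose much larger values (for example, a site's `Crawl-delay`). The request itself is left as a comment because fetching is outside this sketch.

```python
import random
import time

class Throttle:
    """Enforce a minimum delay, plus optional random jitter, between consecutive calls."""

    def __init__(self, min_interval, jitter=0.0):
        self.min_interval = min_interval  # seconds between requests
        self.jitter = jitter              # extra random delay of 0..jitter seconds
        self._last = None

    def wait(self):
        """Sleep until enough time has passed since the previous call."""
        now = time.monotonic()
        if self._last is not None:
            target = self._last + self.min_interval + random.uniform(0, self.jitter)
            if target > now:
                time.sleep(target - now)
        self._last = time.monotonic()

throttle = Throttle(min_interval=0.2, jitter=0.1)
start = time.monotonic()
for url in ["https://a.example", "https://b.example", "https://c.example"]:
    throttle.wait()
    # fetch(url) would go here; "fetch" is a hypothetical helper.
elapsed = time.monotonic() - start
print(f"3 requests paced over {elapsed:.2f}s")
```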

Use Proxies and Anti-Ban Measures

Proxies and anti-ban techniques help maintain continuity and accuracy when collecting data from multiple sources.

- Rotate IPs to avoid detection.

- Use reliable proxy services for large-scale scrapes.

- Keep logs of request timing and status for transparency.
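Proxy rotation and request logging can be combined in one small helper. This sketch cycles through a list of proxy URLs round-robin with the standard library's `urllib.request.ProxyHandler` and logs the proxy, status code, and elapsed time for every request. The proxy addresses are placeholders, and many proxy services provide their own rotating endpoint, which would make the rotation step unnecessary.

```python
import itertools
import logging
import time
import urllib.error
import urllib.request

logging.basicConfig(level=logging.INFO, format="%(asctime)s %(message)s")
log = logging.getLogger("scraper")

class ProxyRotator:
    """Hand out proxies round-robin, building a urllib opener for each one."""

    def __init__(self, proxies):
        if not proxies:
            raise ValueError("at least one proxy URL is required")
        self._cycle = itertools.cycle(proxies)

    def next_opener(self):
        """Return (proxy_url, opener) for the next proxy in the rotation."""
        proxy = next(self._cycle)
        handler = urllib.request.ProxyHandler({"http": proxy, "https": proxy})
        return proxy, urllib.request.build_opener(handler)

def fetch_logged(rotator, url, timeout=15.0):
    """Fetch url through the next proxy, logging proxy, status code, and elapsed time."""
    proxy, opener = rotator.next_opener()
    start = time.monotonic()
    status = None
    try:
        with opener.open(url, timeout=timeout) as response:
            status = response.status
            return response.read()
    except urllib.error.HTTPError as err:
        status = err.code
        raise
    finally:
        # The log line is written for successes and failures alike.
        log.info("url=%s proxy=%s status=%s elapsed=%.2fs",
                 url, proxy, status, time.monotonic() - start)

# Hypothetical proxy endpoints; substitute the ones your provider gives you.
rotator = ProxyRotator(["http://proxy-a.example:8080", "http://proxy-b.example:8080"])
print([rotator.next_opener()[0] for _ in range(3)])
```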

Step-by-Step: Scrape Businesses with Websites Only Using Outscraper

This guide walks you through extracting only businesses that have websites, so you can build high-quality, outreach-ready lead lists.

Prerequisites

- Active Outscraper account (Log in or Sign up)

- API key or access to Outscraper’s Google Maps Data Scraper

Step 1: Define Your Target

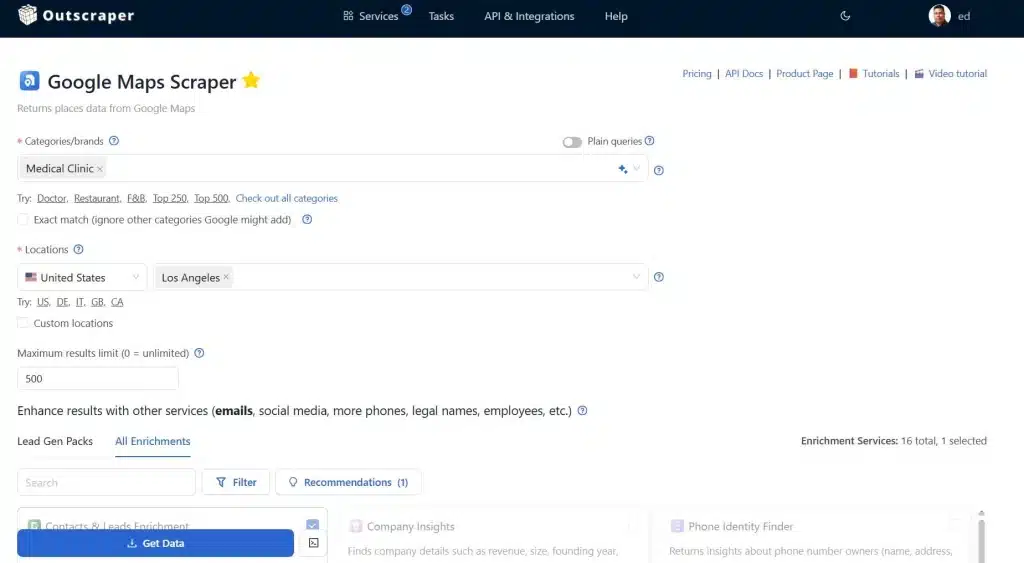

- Open your Outscraper account, go to Services, and select Google Maps Data Scraper.

- Enter your target keywords or categories, followed by a specific location (e.g., “Medical Clinic in Los Angeles”).

- Enter a maximum results limit (e.g., 500). For unlimited results, enter “0” or leave the field blank.

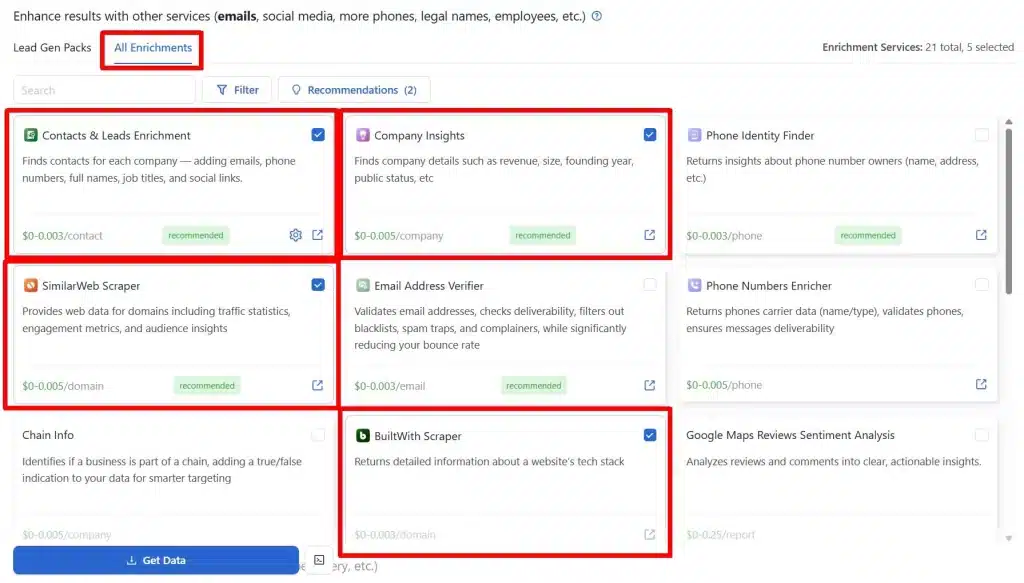
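If you target several categories or cities at once, you can generate the keyword-plus-location queries instead of typing each one. This sketch assumes the scraper's query box accepts one query per line, so confirm that in the app before pasting; the categories and locations are placeholders.

```python
from itertools import product

# Hypothetical inputs: replace with your own categories and locations.
categories = ["Medical clinic", "Dental clinic"]
locations = ["Los Angeles, CA", "San Diego, CA"]

# One "<category> in <location>" query per combination.
queries = [f"{category} in {location}" for category, location in product(categories, locations)]

# Assumption: the query field takes one query per line.
print("\n".join(queries))
```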

Step 2: Enhance Results Using Enrichment Tools

- Enhance results with Outscraper’s Enrichment Features. In this example, we use the “All Enrichments” tab and select Contacts & Lead Enrichments, Company Insights, SimilarWeb Scraper, BuiltWith Scraper, and Trustpilot Scraper.

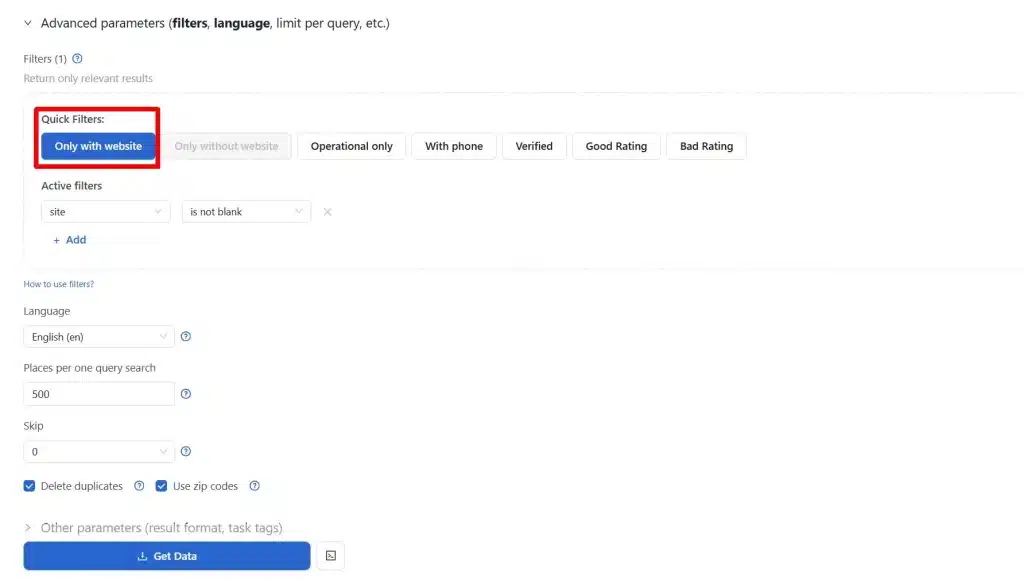

- Go to Advanced Filters and, under Quick Filters, select “Only With Website.”

- Set Language (e.g., English) and Places per one query search (e.g., 500).

- Check Delete duplicates and Use Zip Codes, then use Other Parameters to choose the result format (e.g., CSV) and add task tags.

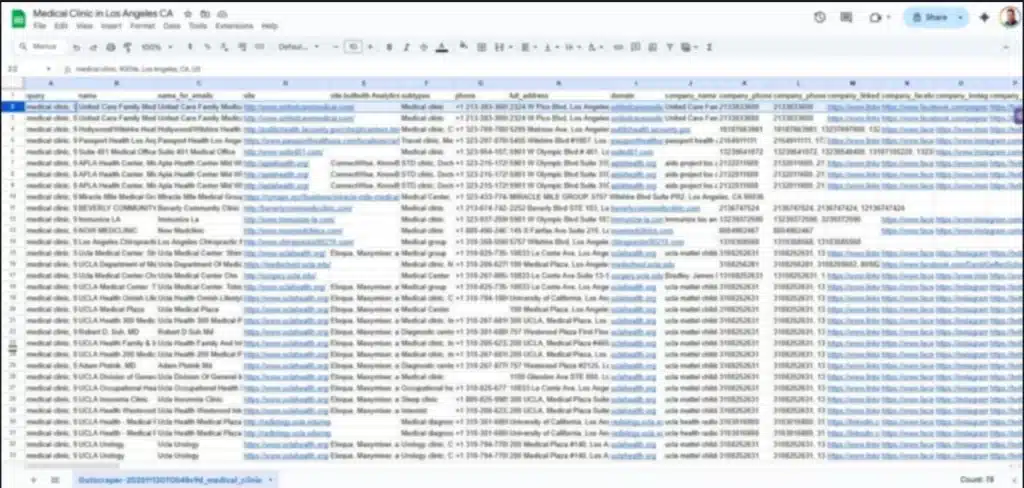

Step 3: Run the Task & Download the Data

- Start the scraper and monitor its progress in the Task section of the Outscraper app. When the task finishes, download the results in the format you selected.

Step 4: Validate the Data & Import Into Your CRM

- After scraping, review the dataset for inactive or broken websites.

- Export the enriched dataset to Google Sheets, HubSpot, Pipedrive, GoHighLevel, or another CRM or platform of your choice.

- Organize the data by location, industry, or company size for targeted outreach.
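The validation and organization steps can also be scripted once you have downloaded the CSV. This sketch assumes the export has `name`, `site`, `city`, and `category` columns; those names are assumptions, so check the header row of your file and adjust them. It drops rows with no website, keeps one row per domain (useful when you merge several tasks), and sorts by city and category so the file is ready to split for outreach.

```python
import csv
import io
from urllib.parse import urlparse

def domain_of(url):
    """Reduce a URL to a bare lowercase domain: 'https://www.Foo.com/x' -> 'foo.com'."""
    url = url.strip()
    if not url:
        return ""
    if "://" not in url:
        url = "http://" + url
    try:
        host = urlparse(url).hostname or ""
    except ValueError:
        return ""  # malformed URL: treat as missing
    return host[4:] if host.startswith("www.") else host

def clean_leads(rows, website_col="site", sort_cols=("city", "category")):
    """Drop rows without a website, keep one row per domain, and sort for segmented outreach."""
    seen = set()
    cleaned = []
    for row in rows:
        domain = domain_of(row.get(website_col) or "")
        if not domain or domain in seen:
            continue  # no usable website, or a domain we already kept
        seen.add(domain)
        cleaned.append({**row, "domain": domain})
    return sorted(cleaned, key=lambda r: tuple((r.get(c) or "") for c in sort_cols))

# Hypothetical export contents; the column names are assumptions.
raw = """name,site,city,category
Sunrise Dental,https://www.sunrisedental.example.com/,Los Angeles,Dentist
Sunrise Dental (2nd listing),http://sunrisedental.example.com,Los Angeles,Dentist
Corner Bakery,,Pasadena,Bakery
Bright Clinic,brightclinic.example.org,Burbank,Medical clinic
"""
leads = clean_leads(csv.DictReader(io.StringIO(raw)))

out = io.StringIO()
writer = csv.DictWriter(out, fieldnames=list(leads[0].keys()))
writer.writeheader()
writer.writerows(leads)
print(out.getvalue())
```

With a real file, replace the `io.StringIO` objects with `open("export.csv", newline="", encoding="utf-8")` for reading and a matching `open(..., "w", newline="", encoding="utf-8")` call for writing; the file names are placeholders.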

Next Steps

- Segment leads based on priority (e.g., high Domain Authority or large companies).

- Start personalized outreach campaigns or research competitors.

- Rescrape on a regular schedule to keep your lead lists up to date; Outscraper’s Schedule feature can automate this. Besides scheduling tasks, you can copy a task as an API request and reuse the same template for other categories or industries you want to scrape.
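Segmenting leads by priority can start with whatever signals your export already contains. Domain Authority and company size may not be in a Maps export, so this sketch uses review count and rating as stand-in signals; the `reviews` and `rating` field names, the thresholds, and the sample leads are all hypothetical.

```python
def priority_tier(lead, min_reviews=100, min_rating=4.5):
    """Assign 'high', 'medium', or 'low' from review count and rating (illustrative thresholds)."""
    try:
        reviews = int(lead.get("reviews") or 0)
        rating = float(lead.get("rating") or 0)
    except (TypeError, ValueError):
        return "low"  # unparseable values: lowest priority
    if reviews >= min_reviews and rating >= min_rating:
        return "high"
    if reviews >= min_reviews // 4:
        return "medium"
    return "low"

def segment(leads):
    """Group lead names by tier so each tier can get its own outreach sequence."""
    tiers = {"high": [], "medium": [], "low": []}
    for lead in leads:
        tiers[priority_tier(lead)].append(lead["name"])
    return tiers

leads = [
    {"name": "Sunrise Dental", "reviews": "240", "rating": "4.7"},
    {"name": "Bright Clinic", "reviews": "40", "rating": "4.9"},
    {"name": "Main St Auto", "reviews": "3", "rating": "5.0"},
    {"name": "Corner Bakery"},
]
print(segment(leads))
```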

Conclusion

Focusing on businesses with websites allows you to target leads that are verifiable, easier to contact, and more likely to respond. By combining Outscraper’s Google Maps Data Scraper with enrichment features like Contacts & Leads, Company Insights, SimilarWeb, BuiltWith, and Trustpilot, you turn raw business lists into high-value datasets.

Filtering, validating, and importing this data into your CRM creates an organized workflow for outreach, competitor research, and local SEO campaigns. Following ethical scraping practices ensures your data remains reliable and compliant while minimizing disruptions.

Consistent application of these steps gives you a steady stream of qualified leads, helping your marketing and sales efforts become more precise and efficient.

FAQ

Targeting businesses with websites ensures your leads are verifiable, easier to contact, and digitally active. Websites provide insights into company size, niche, credibility, and online presence, making your outreach more precise and effective.

Use filters like “Only With Website” in Outscraper, or confirm that the website field is not empty in directories and Google Maps before exporting the data.

Yes. Outscraper provides multiple enrichment tools, including Contacts & Leads, Company Insights, SimilarWeb, BuiltWith, and Trustpilot, to add verified contacts, company details, traffic metrics, technology stack, and review information.

Export the enriched dataset in CSV or JSON format and upload it to platforms like HubSpot, Pipedrive, or GoHighLevel. Outscraper’s API or Integration Tools make this workflow seamless.

Rotate IP addresses, use proxies, apply rate limits, and schedule scrapes during off-peak hours. Keep logs to monitor progress and troubleshoot failed requests.